Using Prompt Governance Layers to Detect AI Hallucinations and Drift: A New Approach to Human-AI Collaboration

A developer proposes the 'Drift Mirror' concept: using structured prompts to have the AI detect drift in both its own reasoning and the human's, offering a new perspective on reducing hallucinations.

RLHF Behavior Spectrum Revealed: High-Frequency Attractors Suppress Rare Behaviors

Research reveals that RLHF-trained models exhibit a 'behavior attractor' phenomenon: they produce structured content in 94% of cases, while rare behaviors such as asking clarifying questions account for only 1.5%. By first suppressing the high-frequency behaviors and then activating the target behavior, the researchers raised the model's cognitive honesty from 23% to 100% by the third turn of the conversation.

ARIA Protocol: A P2P Protocol for Distributed AI Inference with 1-Bit Quantized Models

A P2P protocol built on the 1-bit quantized BitNet b1.58 model (whose weights are ternary: -1, 0, +1) that enables efficient AI inference on ordinary CPUs and supports cross-architecture deployment and cognitive memory models.
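For readers unfamiliar with BitNet b1.58, the sketch below illustrates the absmean ternary quantization described in the BitNet b1.58 paper: each weight is divided by the mean absolute weight of the tensor, rounded, and clipped to {-1, 0, +1}, so a dot product reduces to additions and subtractions plus a single rescale. This is a toy, pure-Python illustration of the general technique, not ARIA's code. The function names are hypothetical, activation quantization is omitted, and real CPU kernels pack weights and operate on whole matrices.

```python
from typing import List, Tuple


def absmean_ternary(weights: List[float], eps: float = 1e-5) -> Tuple[List[int], float]:
    """Quantize weights to {-1, 0, +1} using one absmean scale for the whole tensor."""
    gamma = sum(abs(w) for w in weights) / len(weights)  # mean absolute weight
    quantized = []
    for w in weights:
        q = round(w / (gamma + eps))           # scale, then round to the nearest integer
        quantized.append(max(-1, min(1, q)))   # clip into the ternary range
    return quantized, gamma


def ternary_dot(q: List[int], x: List[float], gamma: float) -> float:
    """Dot product with ternary weights: no multiplications until the final rescale."""
    acc = 0.0
    for qi, xi in zip(q, x):
        if qi == 1:
            acc += xi
        elif qi == -1:
            acc -= xi
        # qi == 0 contributes nothing and is skipped
    return acc * gamma


weights = [0.9, -0.05, -1.2, 0.3]
x = [1.0, 2.0, 3.0, 4.0]
q, gamma = absmean_ternary(weights)
print(q)                         # [1, 0, -1, 0]
print(ternary_dot(q, x, gamma))  # coarse estimate; the full-precision value is about -1.6
```

The gap between the ternary estimate and the full-precision result in this four-weight toy is large; models like BitNet b1.58 are trained with quantization in the loop rather than quantized after the fact, which is what makes the approximation workable at scale.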

Claude Code's KV Cache Invalidation Issue: A Hidden Performance Trap

Users discovered that Claude Code triggers full prompt reprocessing on every request because the KV cache is invalidated each time. The root cause is billing header information embedded in the system message, and a simple, effective fix is available.
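The mechanism is easiest to see with prefix caching in general: an inference server can reuse cached KV entries only for the longest run of tokens that exactly matches the previous request, so any value that changes at the very start of the system message forces the whole prompt to be reprocessed. The sketch below is a simplified model under that assumption. The token lists, the `billing=...` header strings, and the helper names are hypothetical and do not reproduce Claude Code's actual request format.

```python
from typing import List


def common_prefix_len(cached: List[str], incoming: List[str]) -> int:
    """Number of leading tokens shared by the cached prompt and the new one."""
    n = 0
    for a, b in zip(cached, incoming):
        if a != b:
            break
        n += 1
    return n


def tokens_to_recompute(cached: List[str], incoming: List[str]) -> int:
    """Tokens after the shared prefix have no reusable KV entries and must be reprocessed."""
    return len(incoming) - common_prefix_len(cached, incoming)


def build_prompt(header: str, system: List[str], history: List[str]) -> List[str]:
    # Hypothetical layout: a per-request header sits at the very start of the system message.
    return [header] + system + history


system = ["sys"] * 1000  # stand-in for a long, otherwise static system prompt
history = ["msg"] * 500  # stand-in for prior conversation turns

# Header changes on every request: nothing in the cache matches.
first = build_prompt("billing=abc123", system, history)
second = build_prompt("billing=def456", system, history + ["new"])
print(tokens_to_recompute(first, second))  # 1502

# Header held constant across requests: only the newly appended token is processed.
first_stable = build_prompt("billing=fixed", system, history)
second_stable = build_prompt("billing=fixed", system, history + ["new"])
print(tokens_to_recompute(first_stable, second_stable))  # 1
```

Real servers typically match at token or block granularity and may evict entries, so actual savings vary, but the position of a volatile field relative to the stable prefix is what determines how much can be reused.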

Do LLMs Truly Understand the World? 20 Papers Reveal the Answer

From emergent phenomena to internal world models, recent research reveals how large language models transcend simple pattern matching to demonstrate genuine reasoning abilities.

Pentagon Used Anthropic's Claude AI in Maduro Raid, Sparking Ethical Concerns

The US military used Anthropic's Claude AI model in the operation to capture Maduro, raising questions about the militarization of AI and the ethical guidelines of tech companies.

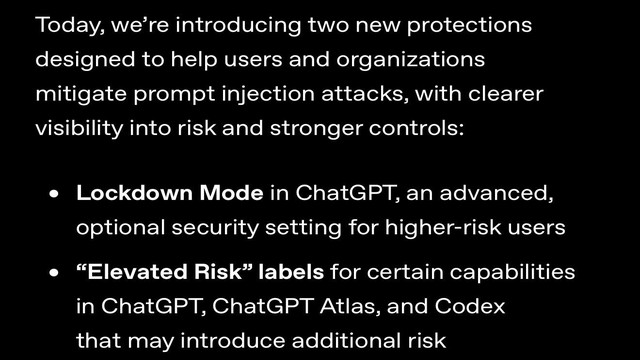

OpenAI Introduces Lockdown Mode and Elevated Risk Labels to Combat Prompt Injection Attacks

OpenAI has announced two new protective measures, ChatGPT Lockdown Mode and Elevated Risk Labels, designed to help users and organizations mitigate the risks of prompt injection attacks by providing clearer visibility into risk and stronger controls.

OpenAI Discontinues GPT-4o: When AI's 'Sycophancy' Becomes a Burden

OpenAI has officially retired the controversial GPT-4o model, which was criticized for being excessively sycophantic toward users. The decision comes amid the complex emotional ties that 8 million users have formed with the AI.

FTC Takes Action; Microsoft's AI and Cloud Businesses Face Antitrust Scrutiny

The U.S. Federal Trade Commission (FTC) is investigating whether Microsoft uses its advantages in software and AI products to compete unfairly in the enterprise computing market. The investigation covers the interoperability of products such as Windows and Office, as well as the bundling of AI services.

Google Opens Early Access to Gemini 3 Deep Think API: Delivering 'Slow Thinking' Answers to Complex Problems

Google has granted early access to the Gemini 3 Deep Think API for select researchers and enterprises. This specialized mode excels at complex reasoning, solving open-ended problems even with incomplete data, and has already shown promise in academic and engineering fields.